Read the first part of this tutorial: Text feature extraction (tf-idf) – Part I.

This post is a continuation of the first part, where we started to learn the theory and practice of text feature extraction and vector space model representation. I strongly recommend reading the first part of the series in order to follow this second post.

Since a lot of people liked the first part of this tutorial, this second part is a little longer than the first.

Introduction

In the first post, we learned how to use the term frequency to represent textual information in the vector space. However, the main problem with the term-frequency approach is that it scales up frequent terms and scales down rare terms, which are empirically more informative than the high-frequency terms. The basic intuition is that a term that occurs frequently in many documents is not a good discriminator, and this really makes sense (at least in many experimental tests). The important question here is: why would you, in a classification problem for instance, emphasize a term that is present in almost the entire corpus of your documents?

The tf-idf weight comes to solve this problem. What tf-idf gives is how important a word is to a document in a collection, and that’s why tf-idf incorporates local and global parameters: it takes into consideration not only the isolated term but also the term within the document collection. What tf-idf then does to solve that problem is to scale down the frequent terms while scaling up the rare terms. A term that occurs 10 times more often than another isn’t 10 times more important than it, and that’s why tf-idf uses a logarithmic scale.

But let’s go back to our definition of $\mathrm{tf}(t, d)$, which is actually the term count of the term $t$ in the document $d$. The use of this simple term frequency could lead us to problems like keyword spamming, which is when a term is repeated in a document with the purpose of improving its ranking on an IR (Information Retrieval) system, or even create a bias towards long documents, making them look more important than they are just because of the high frequency of the term in the document.

To overcome this problem, the term frequency of a document on a vector space is usually also normalized. Let’s see how we normalize this vector.

Vector normalization

Suppose we are going to normalize the term-frequency vector $\vec{v_{d_4}}$ that we have calculated in the first part of this tutorial. The document $d_4$ from the first part of this tutorial had this textual representation:

d4: We can see the shining sun, the bright sun.

And the vector space representation using the non-normalized term frequency of that document was:

$$\vec{v_{d_4}} = (0, 2, 1, 0)$$

To normalize the vector is the same as calculating the unit vector of the vector, and unit vectors are denoted using the “hat” notation: $\hat{v}$. The definition of the unit vector $\hat{v}$ of a vector $\vec{v}$ is:

$$\hat{v} = \frac{\vec{v}}{\|\vec{v}\|_p}$$

where $\hat{v}$ is the unit vector, or the normalized vector, $\vec{v}$ is the vector to be normalized, and $\|\vec{v}\|_p$ is the norm (magnitude, length) of the vector $\vec{v}$ in the $L^p$ space (don’t worry, I’m going to explain it all).

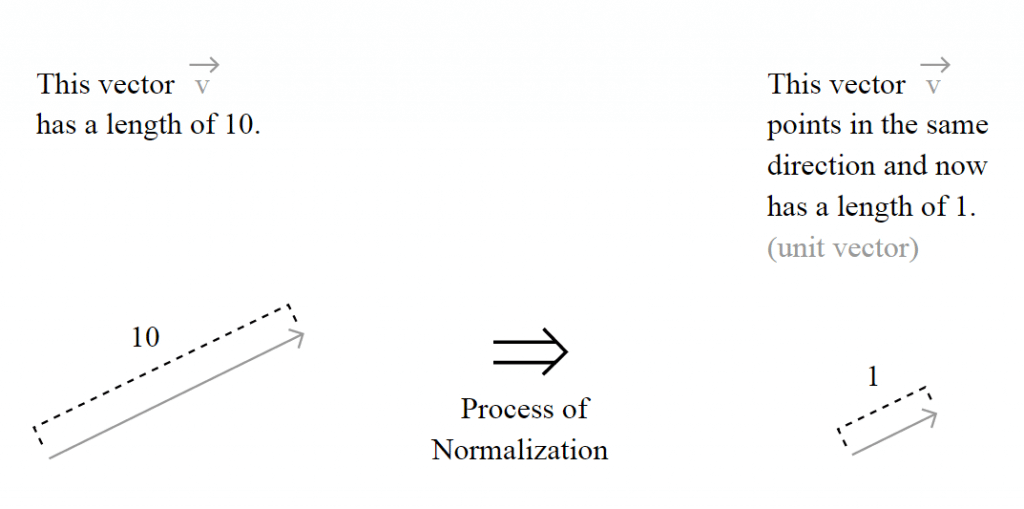

The unit vector is actually nothing more than a normalized version of the vector: a vector whose length is 1.

But the important question here is how the length of the vector is calculated, and to understand this, you must understand the motivation of the $L^p$ spaces, also called Lebesgue spaces.

Lebesgue spaces

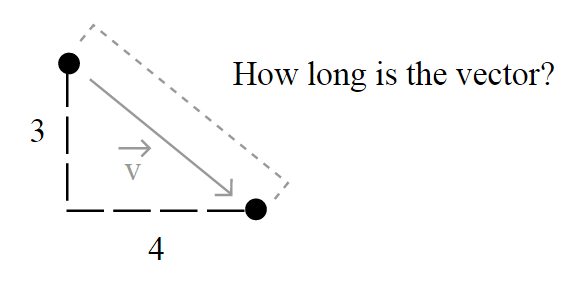

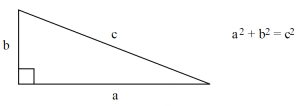

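Usually, the length of a vector is calculated using the Euclidean norm (a norm is a function that assigns a strictly positive length or size to every non-zero vector in a vector space), which is defined by:

$$\|\vec{u}\| = \sqrt{u_1^2 + u_2^2 + u_3^2 + \ldots + u_n^2}$$

But this isn’t the only way to define length, and that’s why you (sometimes) see a number together with the norm notation, like in $\|\vec{u}\|_2$. That’s because it could be generalized as:

$$\|\vec{u}\|_p = \left( |u_1|^p + |u_2|^p + |u_3|^p + \ldots + |u_n|^p \right)^{\frac{1}{p}}$$

and simplified as:

$$\|\vec{u}\|_p = \left( \sum_{i=1}^{n} |u_i|^p \right)^{\frac{1}{p}}$$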

So when you read about an L2-norm, you’re reading about the Euclidean norm, a norm with $p = 2$, the most common norm used to measure the length of a vector, typically called “magnitude”. Actually, when you have an unqualified length measure (without the $p$ number), you have the L2-norm (Euclidean norm).

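When you read about an L1-norm, you’re reading about the norm with $p = 1$, defined as:

$$\|\vec{u}\|_1 = |u_1| + |u_2| + |u_3| + \ldots + |u_n|$$

which is nothing more than a simple sum of the absolute values of the components of the vector. The distance it induces is known as the taxicab distance, also called Manhattan distance.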
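To make the general definition concrete, here is a minimal sketch in plain Python 3 (standard library only). The function name `p_norm` and the sample vector are illustrative choices of mine, not something taken from Scikit.learn.

```python
def p_norm(vector, p):
    """Return the Lp-norm of a vector: (sum of |u_i|^p) ** (1/p)."""
    if p < 1:
        raise ValueError("p must be >= 1 for a valid norm")
    return sum(abs(component) ** p for component in vector) ** (1.0 / p)


v = [3, -4]          # illustrative vector
l1 = p_norm(v, 1)    # |3| + |-4| = 7
l2 = p_norm(v, 2)    # sqrt(3**2 + (-4)**2) = 5
print(l1, l2)
```

The same function covers both norms discussed here: `p=1` gives the taxicab length and `p=2` gives the Euclidean length.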

Taxicab geometry versus Euclidean distance: In taxicab geometry all three pictured lines have the same length (12) for the same route. In Euclidean geometry, the green line has length $6 \times \sqrt{2} \approx 8.48$, and is the unique shortest path.

Source: Wikipedia :: Taxicab Geometry

Note that you can use any norm to normalize the vector, but we’re going to use the most common one, the L2-norm, which is also the default in the 0.9 release of scikits.learn. You can also find papers comparing the performance of the two approaches, among other methods to normalize the document vector. You can actually use any other method, but you have to be consistent: once you’ve used a norm, you have to use it for the whole process directly involving the norm (a unit vector that was normalized with the L1-norm isn’t going to have length 1 if you take its L2-norm later).
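The point about consistency can be checked with a small sketch in plain Python 3. It takes the vector (0, 2, 1, 0) from this tutorial, normalizes it with the L1-norm, and then measures the result with both norms. The variable names are illustrative.

```python
import math

v = [0, 2, 1, 0]

# Normalize with the L1-norm: divide by the sum of absolute values.
l1_length = sum(abs(x) for x in v)
v_l1 = [x / l1_length for x in v]

# Measure the L1-normalized vector with both norms.
l1_of_result = sum(abs(x) for x in v_l1)
l2_of_result = math.sqrt(sum(x * x for x in v_l1))

print(l1_of_result)  # 1, up to floating-point rounding
print(l2_of_result)  # about 0.745, so not a unit vector under the L2-norm
```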

Back to vector normalization

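Now that you know what the vector normalization process is, we can try a concrete example: the process of using the L2-norm (we’ll use the right terms now) to normalize our vector $\vec{v_{d_4}} = (0, 2, 1, 0)$ in order to get its unit vector $\hat{v_{d_4}}$. To do that, we’ll simply plug it into the definition of the unit vector to evaluate it:

$$\hat{v_{d_4}} = \frac{\vec{v_{d_4}}}{\|\vec{v_{d_4}}\|_2} = \frac{(0, 2, 1, 0)}{\sqrt{0^2 + 2^2 + 1^2 + 0^2}} = \frac{(0, 2, 1, 0)}{\sqrt{5}} \approx (0.0, 0.89442719, 0.4472136, 0.0)$$

And that is it! Our normalized vector $\hat{v_{d_4}}$ now has an L2-norm $\|\hat{v_{d_4}}\|_2 = 1.0$.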
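The same calculation can be reproduced with a few lines of plain Python 3. This is only a sketch: the helper name `l2_normalize` is mine, and returning a zero vector unchanged is a choice made for this example.

```python
import math


def l2_normalize(vector):
    """Return the unit vector: each component divided by the L2-norm."""
    length = math.sqrt(sum(x * x for x in vector))
    if length == 0:
        # A zero vector has no direction; return it unchanged (sketch-only choice).
        return list(vector)
    return [x / length for x in vector]


v_d4 = [0, 2, 1, 0]
unit_d4 = l2_normalize(v_d4)
print([round(x, 8) for x in unit_d4])
# [0.0, 0.89442719, 0.4472136, 0.0]
```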

Note that here we have normalized our term frequency document vector, but later we’re going to do that after the calculation of the tf-idf.

The term frequency – inverse document frequency (tf-idf) weight

Now that you have understood how vector normalization works in theory and practice, let’s continue our tutorial. Suppose you have the following documents in your collection (taken from the first part of the tutorial):

Train Document Set:

d1: The sky is blue.

d2: The sun is bright.

Test Document Set:

d3: The sun in the sky is bright.

d4: We can see the shining sun, the bright sun.

Your document space can then be defined as $D = \{ d_1, d_2, \ldots, d_n \}$, where $n$ is the number of documents in your corpus, and in our case as $D_{train} = \{d_1, d_2\}$ and $D_{test} = \{d_3, d_4\}$. The cardinality of our document space is defined by $|D_{train}| = 2$ and $|D_{test}| = 2$, since we have only two documents each for training and testing, but they obviously don’t need to have the same cardinality.

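Let’s see now how idf (inverse document frequency) is defined:

$$\mathrm{idf}(t) = \log{\frac{|D|}{1 + |\{d : t \in d\}|}}$$

where $|\{d : t \in d\}|$ is the number of documents where the term $t$ appears, that is, the documents for which the term-frequency function satisfies $\mathrm{tf}(t, d) \neq 0$. We’re only adding 1 into the formula to avoid zero-division.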

The formula for the tf-idf is then:

$$\text{tf-idf}(t, d) = \mathrm{tf}(t, d) \times \mathrm{idf}(t)$$

and this formula has an important consequence: a high weight of the tf-idf calculation is reached when you have a high term frequency (tf) in the given document (local parameter) and a low document frequency of the term in the whole collection (global parameter).
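As a rough illustration of both formulas, the sketch below computes idf and tf-idf for single terms in plain Python 3. The token lists are an assumption of mine: d3 and d4 tokenized by hand and restricted to the four vocabulary terms (blue, sun, bright, sky). The function names are illustrative too.

```python
import math

# Hand-tokenized d3 and d4, keeping only the vocabulary terms (assumption).
documents = {
    "d3": ["sun", "sky", "bright"],
    "d4": ["sun", "bright", "sun"],
}


def idf(term, docs):
    """log(|D| / (1 + number of documents containing the term))."""
    doc_freq = sum(1 for tokens in docs.values() if term in tokens)
    return math.log(len(docs) / (1 + doc_freq))


def tf_idf(term, doc_name, docs):
    """Raw term count in the document multiplied by the term's idf."""
    return docs[doc_name].count(term) * idf(term, docs)


print(round(idf("blue", documents), 8))          # 0.69314718
print(round(tf_idf("sun", "d4", documents), 8))  # -0.81093022
```

Note the negative weight for "sun": with this idf definition, a term that appears in every document makes the fraction inside the logarithm smaller than 1.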

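Now let’s calculate the idf for each feature present in the feature matrix with the term frequency we have calculated in the first tutorial:

$$M_{tf} = \begin{bmatrix} 0 & 1 & 1 & 1 \\ 0 & 2 & 1 & 0 \end{bmatrix}$$

Here each row is a document ($d_3$ and $d_4$) and each column is a feature (blue, sun, bright, sky), so $|D| = 2$ for this matrix.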

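Since we have 4 features, we have to calculate $\mathrm{idf}(t_1)$, $\mathrm{idf}(t_2)$, $\mathrm{idf}(t_3)$, $\mathrm{idf}(t_4)$ (using the natural logarithm):

$$\mathrm{idf}(t_1) = \log{\frac{|D|}{1 + |\{d : t_1 \in d\}|}} = \log{\frac{2}{1}} = 0.69314718$$

$$\mathrm{idf}(t_2) = \log{\frac{|D|}{1 + |\{d : t_2 \in d\}|}} = \log{\frac{2}{3}} = -0.40546511$$

$$\mathrm{idf}(t_3) = \log{\frac{|D|}{1 + |\{d : t_3 \in d\}|}} = \log{\frac{2}{3}} = -0.40546511$$

$$\mathrm{idf}(t_4) = \log{\frac{|D|}{1 + |\{d : t_4 \in d\}|}} = \log{\frac{2}{2}} = 0.0$$

These idf weights can be represented by a vector as:

$$\vec{idf} = (0.69314718, -0.40546511, -0.40546511, 0.0)$$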
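The idf vector can also be derived directly from the term-frequency matrix. The sketch below is plain Python 3 with illustrative variable names. It counts, for each column, how many documents have a non-zero term frequency.

```python
import math

# Term-frequency matrix: rows are d3 and d4, columns are blue, sun, bright, sky.
tf_matrix = [
    [0, 1, 1, 1],
    [0, 2, 1, 0],
]

n_docs = len(tf_matrix)
idf_vector = []
for column in zip(*tf_matrix):
    # Document frequency: number of rows with a non-zero count for this term.
    doc_freq = sum(1 for count in column if count != 0)
    idf_vector.append(math.log(n_docs / (1 + doc_freq)))

print([round(w, 8) for w in idf_vector])
# [0.69314718, -0.40546511, -0.40546511, 0.0]
```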

Now that we have our matrix with the term frequency ($M_{tf}$) and the vector representing the idf for each feature of our matrix ($\vec{idf}$), we can calculate our tf-idf weights. What we have to do is a simple multiplication of each column of the matrix $M_{tf}$ with the respective $\vec{idf}$ vector dimension. To do that, we can create a square diagonal matrix called $M_{idf}$ with both the vertical and horizontal dimensions equal to the vector $\vec{idf}$ dimension:

$$M_{idf} = \begin{bmatrix} 0.69314718 & 0 & 0 & 0 \\ 0 & -0.40546511 & 0 & 0 \\ 0 & 0 & -0.40546511 & 0 \\ 0 & 0 & 0 & 0 \end{bmatrix}$$

and then multiply it by the term frequency matrix, so the final result can be defined as:

$$M_{\text{tf-idf}} = M_{tf} \times M_{idf}$$

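Please note that matrix multiplication isn’t commutative: the result of $A \times B$ will in general be different from the result of $B \times A$, and this is why $M_{idf}$ is on the right side of the multiplication, to accomplish the desired effect of multiplying each idf value by its corresponding feature:

$$\begin{bmatrix} \mathrm{tf}(t_1, d_1) & \mathrm{tf}(t_2, d_1) & \mathrm{tf}(t_3, d_1) & \mathrm{tf}(t_4, d_1) \\ \mathrm{tf}(t_1, d_2) & \mathrm{tf}(t_2, d_2) & \mathrm{tf}(t_3, d_2) & \mathrm{tf}(t_4, d_2) \end{bmatrix} \times \begin{bmatrix} \mathrm{idf}(t_1) & 0 & 0 & 0 \\ 0 & \mathrm{idf}(t_2) & 0 & 0 \\ 0 & 0 & \mathrm{idf}(t_3) & 0 \\ 0 & 0 & 0 & \mathrm{idf}(t_4) \end{bmatrix} = \begin{bmatrix} \mathrm{tf}(t_1, d_1) \, \mathrm{idf}(t_1) & \mathrm{tf}(t_2, d_1) \, \mathrm{idf}(t_2) & \mathrm{tf}(t_3, d_1) \, \mathrm{idf}(t_3) & \mathrm{tf}(t_4, d_1) \, \mathrm{idf}(t_4) \\ \mathrm{tf}(t_1, d_2) \, \mathrm{idf}(t_1) & \mathrm{tf}(t_2, d_2) \, \mathrm{idf}(t_2) & \mathrm{tf}(t_3, d_2) \, \mathrm{idf}(t_3) & \mathrm{tf}(t_4, d_2) \, \mathrm{idf}(t_4) \end{bmatrix}$$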

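Let’s see now a concrete example of this multiplication:

$$M_{\text{tf-idf}} = M_{tf} \times M_{idf} = \begin{bmatrix} 0 & 1 & 1 & 1 \\ 0 & 2 & 1 & 0 \end{bmatrix} \times \begin{bmatrix} 0.69314718 & 0 & 0 & 0 \\ 0 & -0.40546511 & 0 & 0 \\ 0 & 0 & -0.40546511 & 0 \\ 0 & 0 & 0 & 0 \end{bmatrix} = \begin{bmatrix} 0 & -0.40546511 & -0.40546511 & 0 \\ 0 & -0.81093022 & -0.40546511 & 0 \end{bmatrix}$$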
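This multiplication can be checked with a short plain Python 3 sketch. The `matmul` helper and the variable names are illustrative and do not come from any library; in practice you would use NumPy or SciPy for this.

```python
tf_matrix = [
    [0, 1, 1, 1],
    [0, 2, 1, 0],
]
idf_vector = [0.69314718, -0.40546511, -0.40546511, 0.0]

# Square diagonal matrix with the idf weights on the main diagonal.
size = len(idf_vector)
idf_diag = [[idf_vector[i] if i == j else 0.0 for j in range(size)]
            for i in range(size)]


def matmul(a, b):
    """Plain row-by-column product of two matrices given as lists of lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


# The diagonal matrix goes on the right so that each column is scaled.
tf_idf_matrix = matmul(tf_matrix, idf_diag)
for row in tf_idf_matrix:
    print([round(value, 8) for value in row])
# [0.0, -0.40546511, -0.40546511, 0.0]
# [0.0, -0.81093022, -0.40546511, 0.0]
```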

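And finally, we can apply our L2 normalization process to the $M_{\text{tf-idf}}$ matrix. Please note that this normalization is “row-wise” because we’re going to handle each row of the matrix as a separate vector to be normalized, and not the matrix as a whole:

$$M_{\text{tf-idf}} = \frac{M_{\text{tf-idf}}}{\|M_{\text{tf-idf}}\|_2} = \begin{bmatrix} 0 & -0.70710678 & -0.70710678 & 0 \\ 0 & -0.89442719 & -0.4472136 & 0 \end{bmatrix}$$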

And that is our pretty normalized tf-idf weight of our testing document set, which is actually a collection of unit vectors. If you take the L2-norm of each row of the matrix, you’ll see that they all have a L2-norm of 1.
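The row-wise normalization can be sketched in plain Python 3 as follows. The helper name `l2_normalize_rows` is illustrative, and leaving all-zero rows untouched is a choice I made for this sketch.

```python
import math

tf_idf_matrix = [
    [0.0, -0.40546511, -0.40546511, 0.0],
    [0.0, -0.81093022, -0.40546511, 0.0],
]


def l2_normalize_rows(matrix):
    """Divide every row by its own L2-norm; all-zero rows are left as they are."""
    normalized = []
    for row in matrix:
        length = math.sqrt(sum(x * x for x in row))
        normalized.append([x / length for x in row] if length else list(row))
    return normalized


normalized = l2_normalize_rows(tf_idf_matrix)
for row in normalized:
    row_norm = math.sqrt(sum(x * x for x in row))
    print([round(x, 8) for x in row], round(row_norm, 8))
# [0.0, -0.70710678, -0.70710678, 0.0] 1.0
# [0.0, -0.89442719, -0.4472136, 0.0] 1.0
```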

Python practice

Environment Used: Python v.2.7.2, Numpy 1.6.1, Scipy v.0.9.0, Sklearn (Scikits.learn) v.0.9.

Now the section you were waiting for! In this section I’ll use Python to show each step of the tf-idf calculation using the Scikit.learn feature extraction module.

The first step is to create our training and testing document sets and compute the term frequency matrix:

    from sklearn.feature_extraction.text import CountVectorizer

    train_set = ("The sky is blue.", "The sun is bright.")
    test_set = ("The sun in the sky is bright.",
                "We can see the shining sun, the bright sun.")

    # Learn the vocabulary from the training documents only.
    count_vectorizer = CountVectorizer()
    count_vectorizer.fit_transform(train_set)
    print "Vocabulary:", count_vectorizer.vocabulary
    # Vocabulary: {'blue': 0, 'sun': 1, 'bright': 2, 'sky': 3}

    # Count the vocabulary terms in the test documents.
    freq_term_matrix = count_vectorizer.transform(test_set)
    print freq_term_matrix.todense()
    # [[0 1 1 1]
    #  [0 2 1 0]]

Now that we have the frequency term matrix (called freq_term_matrix), we can instantiate the TfidfTransformer, which is going to be responsible for calculating the tf-idf weights for our term frequency matrix:

    from sklearn.feature_extraction.text import TfidfTransformer

    tfidf = TfidfTransformer(norm="l2")
    tfidf.fit(freq_term_matrix)

    print "IDF:", tfidf.idf_
    # IDF: [ 0.69314718 -0.40546511 -0.40546511  0.        ]

Note that I’ve specified the norm as L2. This is optional (the default is actually the L2-norm), but I’ve added the parameter to make it explicit that it’s going to use the L2-norm. Also note that you can see the calculated idf weights by accessing the internal attribute called idf_. Now that the fit() method has calculated the idf for the matrix, let’s transform the freq_term_matrix to the tf-idf weight matrix:

    tf_idf_matrix = tfidf.transform(freq_term_matrix)
    print tf_idf_matrix.todense()
    # [[ 0.         -0.70710678 -0.70710678  0.        ]
    #  [ 0.         -0.89442719 -0.4472136   0.        ]]

And that is it, the tf_idf_matrix is actually our previous normalized tf-idf matrix. You can accomplish the same effect by using the Vectorizer class of Scikit.learn, which is a vectorizer that automatically combines the CountVectorizer and the TfidfTransformer for you. See this example to know how to use it for the text classification process.

I really hope you liked the post. I tried to make it as simple as possible, even for people without the required mathematical background of linear algebra, etc. In the next Machine Learning post I’m expecting to show how you can use tf-idf to calculate the cosine similarity.

If you liked it, feel free to comment and make suggestions, corrections, etc.


Updates

13 Mar 2015 – Formatting, fixed image issues.

03 Oct 2011 – Added the info about the environment used for Python examples