In mathematics, we have the concept of universality: laws such as the law of large numbers, Benford’s law (which I have cited a lot in previous posts), the central limit theorem, and many others that act like laws of physics for the world of mathematics. These laws are not our inventions. The concepts are our inventions, but the laws themselves are universal: they hold no matter where you are on Earth or how far away in the universe you live. That is why Frank Drake, one of the founders of SETI and a pioneer in the search for extraterrestrial intelligence, came up with the brilliant idea of using prime numbers (another example of universality) to communicate with distant worlds. His idea was to use prime numbers to encode the dimensions of a transmitted image in the size of the image itself (not really to hide them, but to make them self-evident, as you’ll understand later).

Imagine you are receiving a message that is a sequence of dashes and dots like “—.-.—.-.——–…-.—” that repeats after a short pause, again and again. Suppose this message is 1679 symbols long. You begin analyzing that number, which is in fact a semiprime (a product of two prime numbers, the same kind of number used in cryptography): it factors as 23 × 73 = 1679, and this is its only prime factorization (every integer greater than 1 has exactly one prime factorization; see the fundamental theorem of arithmetic). Since there are only two prime factors, you will try to reshape the signal into a 2D image, and that image can only have the dimensions 23×73 or 73×23. Arrange the symbols in one of these layouts and the image makes sense; arrange them in the other and you get just a random, strange-looking pattern. By choosing a semiprime length, the sender uses the total image size to define the only two possible (non-trivial) ways of arranging the image.
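To make the reasoning above concrete, here is a minimal Python sketch of what the receiver would do with the message length. The function names `prime_factors` and `candidate_shapes` are my own, chosen for illustration, and plain trial division is used only because the number is tiny.

```python
def prime_factors(n):
    """Return the prime factors of n in ascending order (trial division)."""
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        # Whatever is left after dividing out the small factors is prime.
        factors.append(n)
    return factors


def candidate_shapes(length):
    """Return the (rows, cols) layouts for a semiprime length, else []."""
    factors = prime_factors(length)
    if len(factors) != 2:
        return []  # not a semiprime, so the length does not pin down a shape
    p, q = factors
    return sorted({(p, q), (q, p)})


print(prime_factors(1679))     # [23, 73]
print(candidate_shapes(1679))  # [(23, 73), (73, 23)]
```

A length such as 12 (2 × 2 × 3) returns an empty list here, because it could be laid out as 2×6, 3×4, 4×3 or 6×2 and the receiver would have more guessing to do.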

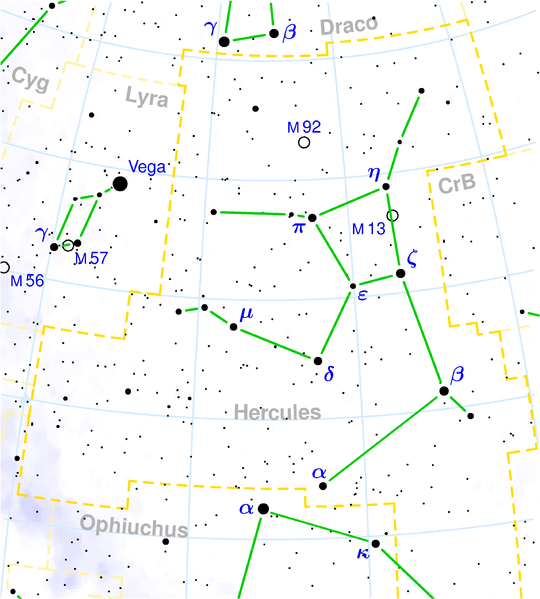

This idea was actually put into practice in 1974, when the Arecibo radio telescope broadcast a frequency-modulated (FM) message aimed at the M13 globular star cluster, about 25,000 light-years away:

This message had a size of (surprise) 1679 binary digits and carried a lot of information about our world, such as a graphical representation of a human, the numbers from 1 to 10, a graphical representation of the Arecibo radio telescope, etc.

Decoded as 23 rows and 73 columns, the message looks like this:

As you can see, the message looks like nonsense, but when it is decoded as an image with 73 rows and 23 columns, it shows its real meaning:
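The same effect can be reproduced in miniature. The sketch below does not use the real Arecibo data; it uses a made-up 35-bit message (35 = 5 × 7, also a semiprime) that draws a letter "A", and the helper names `reshape` and `render` are hypothetical, picked just for this example.

```python
def reshape(bits, rows, cols):
    """Cut a flat string of bits into `rows` rows of `cols` symbols each."""
    if len(bits) != rows * cols:
        raise ValueError("length does not match rows * cols")
    return [bits[r * cols:(r + 1) * cols] for r in range(rows)]


def render(grid):
    """Draw 1 bits as '#' and 0 bits as '.' so the picture is visible."""
    return "\n".join(
        "".join("#" if b == "1" else "." for b in row) for row in grid
    )


# Toy picture (7 rows x 5 columns), invented for illustration only.
GLYPH = [
    ".###.",
    "#...#",
    "#...#",
    "#####",
    "#...#",
    "#...#",
    "#...#",
]

# The "transmission": the picture flattened row by row into 35 bits.
bits = "".join("1" if c == "#" else "0" for row in GLYPH for c in row)

print(render(reshape(bits, 5, 7)))  # wrong orientation: scrambled pattern
print()
print(render(reshape(bits, 7, 5)))  # right orientation: the letter "A"
```

With only two layouts to try, the receiver just looks at both and keeps the one that shows structure.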

Amazing, don’t you think? I hope you liked it!

– Christian S. Perone