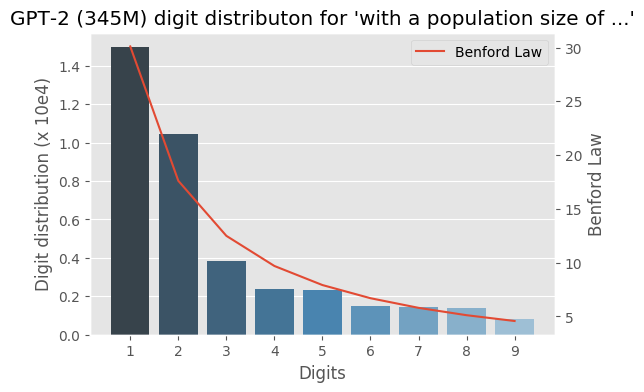

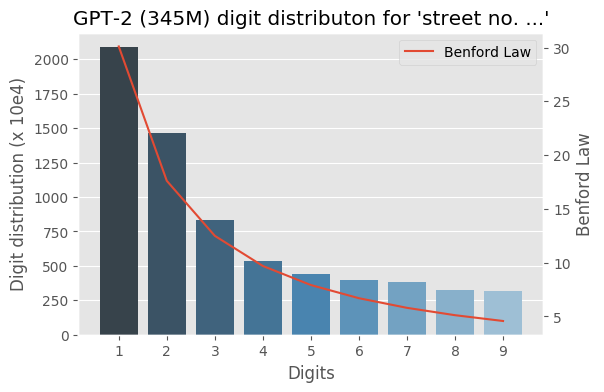

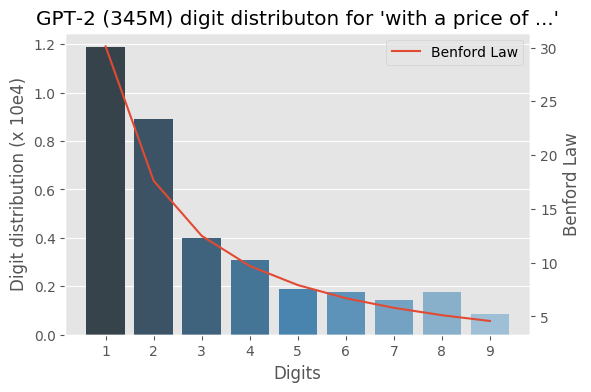

Some months ago I wrote about how Benford's law emerges from language models. Today I decided to apply the same method to GPT-2, to check how it behaves with a few sentences, and it turns out that it also seems to capture these power laws. The plots in this post show, for the GPT-2 medium model (345M), the probability of each digit given a particular sentence such as “with a population size of”, that is, the distribution $$P(\{1,2, \ldots, 9\} \vert \text{“with a population size of”})$$.
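To make the idea concrete, here is a minimal sketch of how a leading-digit distribution could be extracted from GPT-2 and compared against Benford's law, which predicts $$P(d) = \log_{10}(1 + 1/d)$$ for the leading digit $$d$$. This is not the code used to produce the plots in this post. It assumes a recent version of the Hugging Face `transformers` package together with `torch`, and the helper names (`benford_distribution`, `normalize_over_digits`, `leading_digit_distribution`) are hypothetical. It also makes a simplifying assumption: every vocabulary token whose text starts with a digit from 1 to 9 is counted towards that digit, and the result is renormalized over the nine digits.

```python
import math


def benford_distribution():
    """Expected leading-digit probabilities under Benford's law: log10(1 + 1/d)."""
    return {d: math.log10(1 + 1 / d) for d in range(1, 10)}


def normalize_over_digits(scores):
    """Renormalize non-negative scores for the digits 1..9 so they sum to 1."""
    total = sum(scores[d] for d in range(1, 10))
    return {d: scores[d] / total for d in range(1, 10)}


def leading_digit_distribution(prompt, model_name="gpt2-medium"):
    """Sketch of P(leading digit | prompt) using a GPT-2 checkpoint.

    Assumption: `torch` and a recent Hugging Face `transformers` are installed.
    The imports are local so the Benford helpers above work without them.
    """
    import torch
    from transformers import GPT2LMHeadModel, GPT2Tokenizer

    tokenizer = GPT2Tokenizer.from_pretrained(model_name)
    model = GPT2LMHeadModel.from_pretrained(model_name)
    model.eval()

    input_ids = tokenizer.encode(prompt, return_tensors="pt")
    with torch.no_grad():
        # Logits for the token that would follow the prompt.
        logits = model(input_ids).logits[0, -1]
    probs = torch.softmax(logits, dim=-1).tolist()

    # Simplification: accumulate the probability of every token whose
    # text (ignoring leading whitespace) begins with a digit 1..9.
    scores = {d: 0.0 for d in range(1, 10)}
    for token_id, p in enumerate(probs):
        text = tokenizer.decode([token_id]).strip()
        if text and text[0] in "123456789":
            scores[int(text[0])] += p
    return normalize_over_digits(scores)


if __name__ == "__main__":
    expected = benford_distribution()
    observed = leading_digit_distribution("with a population size of")
    for d in range(1, 10):
        print(d, round(expected[d], 3), round(observed[d], 3))
```

Running the script downloads the model weights on first use and prints, for each digit, the Benford expectation next to the model's renormalized probability, so the two can be compared side by side or plotted.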

Cite this article as: Christian S. Perone, "Benford law on GPT-2 language model," in Terra Incognita, 14/06/2019, https://blog.christianperone.com/2019/06/benford-law-on-gpt-2-language-model/.