Deep learning – Convolutional neural networks and feature extraction with Python

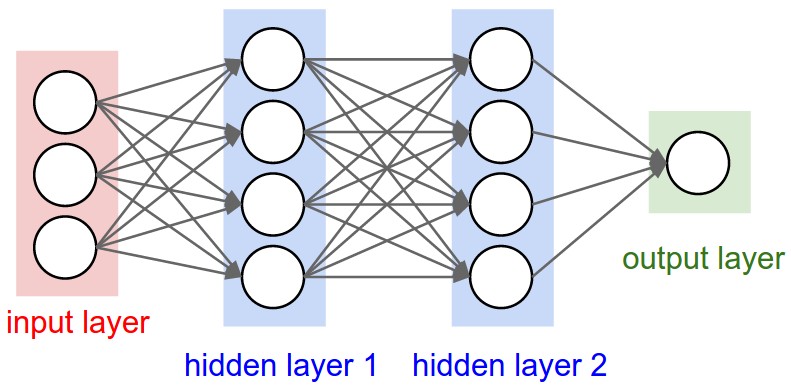

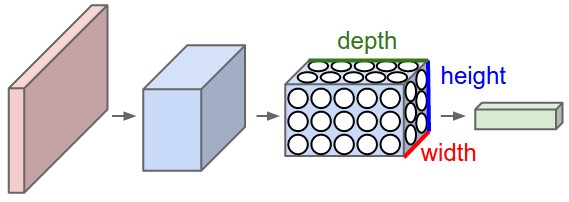

Convolutional neural networks (or ConvNets) are biologically inspired variants of MLPs. They have different kinds of layers, and each layer works differently from the usual MLP layers. If you are interested in learning more about ConvNets, a good course is CS231n – Convolutional Neural Networks for Visual Recognition. The images above show the architecture of a CNN.

As you can see, ConvNets work with 3D volumes and transformations of these 3D volumes. I won’t repeat the entire CS231n tutorial in this post, so if you’re really interested, please take the time to read it before continuing.

Lasagne and nolearn

Two of the Python packages for deep learning that I really like to work with are Lasagne and nolearn. Lasagne is based on Theano, so the GPU speedups make a great difference, and their declarative approach to creating neural networks is really helpful. The nolearn library is a collection of utilities around neural network packages (including Lasagne) that helps a lot when creating the network architecture, inspecting the layers, etc.

In this post I’m going to show how to build a simple ConvNet architecture with some convolutional and pooling layers. I’m also going to show how you can train a ConvNet as a feature extractor and then use it to extract features before feeding them into different models such as an SVM or logistic regression. Many people take ConvNet models pre-trained on ImageNet and remove the last output layer to extract features. This is usually called transfer learning, because layers from one ConvNet are reused as feature extractors for different problems: since the first-layer filters of a ConvNet work as edge detectors, they can serve as general feature detectors for other problems.

Loading the MNIST dataset

The MNIST dataset is one of the most traditional datasets for digit classification. We will use a pickled version of it for Python, but first, let’s import the packages that we will need:

    import matplotlib
    import matplotlib.pyplot as plt
    import matplotlib.cm as cm
    from urllib import urlretrieve  # Python 2 location of urlretrieve
    import cPickle as pickle        # Python 2 module
    import os
    import gzip
    import numpy as np
    import theano
    import lasagne
    from lasagne import layers
    from lasagne.updates import nesterov_momentum
    from nolearn.lasagne import NeuralNet
    from nolearn.lasagne import visualize
    from sklearn.metrics import classification_report
    from sklearn.metrics import confusion_matrix

As you can see, we are importing matplotlib for plotting some images, some native Python modules to download the MNIST dataset, numpy, theano, lasagne, nolearn and some scikit-learn functions for model evaluation.

After that, we define our MNIST loading function (this is pretty much the same function used in the Lasagne tutorial):

    def load_dataset():
        url = 'http://deeplearning.net/data/mnist/mnist.pkl.gz'
        filename = 'mnist.pkl.gz'
        # Download the pickled dataset only once
        if not os.path.exists(filename):
            print("Downloading MNIST dataset...")
            urlretrieve(url, filename)
        with gzip.open(filename, 'rb') as f:
            data = pickle.load(f)
        # The pickle holds three (images, labels) pairs
        X_train, y_train = data[0]
        X_val, y_val = data[1]
        X_test, y_test = data[2]
        # Reshape to (samples, channels, rows, columns)
        X_train = X_train.reshape((-1, 1, 28, 28))
        X_val = X_val.reshape((-1, 1, 28, 28))
        X_test = X_test.reshape((-1, 1, 28, 28))
        y_train = y_train.astype(np.uint8)
        y_val = y_val.astype(np.uint8)
        y_test = y_test.astype(np.uint8)
        return X_train, y_train, X_val, y_val, X_test, y_test

As you can see, we download the pickled MNIST dataset and then unpack it into three different datasets: train, validation and test. After that we reshape the image contents into the (samples, channels, rows, columns) layout expected by the Lasagne input layer, and we also convert the label arrays to uint8 due to the GPU/Theano datatype restrictions.
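The loading code in this post targets Python 2 (`from urllib import urlretrieve`, `cPickle`). As a rough sketch of how the same step might be written to run on Python 3 as well: the helper name `load_pickled_splits` is hypothetical, and the `encoding='latin1'` argument assumes the file was pickled under Python 2.

```python
import gzip
import pickle
import sys

try:  # Python 3
    from urllib.request import urlretrieve
except ImportError:  # Python 2
    from urllib import urlretrieve


def load_pickled_splits(filename):
    """Load a gzipped pickle holding (train, validation, test) splits."""
    with gzip.open(filename, 'rb') as f:
        if sys.version_info[0] >= 3:
            # Assumption: the file was pickled under Python 2, so Python 3
            # needs an explicit encoding to decode its byte strings.
            return pickle.load(f, encoding='latin1')
        return pickle.load(f)
```

The returned object can then be unpacked and reshaped exactly as in `load_dataset` above.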

After that, we’re ready to load the MNIST dataset and inspect it:

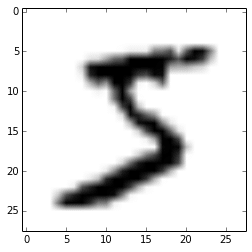

    X_train, y_train, X_val, y_val, X_test, y_test = load_dataset()
    plt.imshow(X_train[0][0], cmap=cm.binary)

The code above will output an image of the first training digit (I’m using IPython Notebook):

ConvNet Architecture and Training

Now we can define our ConvNet architecture and then train it using a GPU/CPU (I have a very cheap GPU, but it helps a lot):

    net1 = NeuralNet(
        layers=[('input', layers.InputLayer),
                ('conv2d1', layers.Conv2DLayer),
                ('maxpool1', layers.MaxPool2DLayer),
                ('conv2d2', layers.Conv2DLayer),
                ('maxpool2', layers.MaxPool2DLayer),
                ('dropout1', layers.DropoutLayer),
                ('dense', layers.DenseLayer),
                ('dropout2', layers.DropoutLayer),
                ('output', layers.DenseLayer),
                ],
        # input layer
        input_shape=(None, 1, 28, 28),
        # layer conv2d1
        conv2d1_num_filters=32,
        conv2d1_filter_size=(5, 5),
        conv2d1_nonlinearity=lasagne.nonlinearities.rectify,
        conv2d1_W=lasagne.init.GlorotUniform(),
        # layer maxpool1
        maxpool1_pool_size=(2, 2),
        # layer conv2d2
        conv2d2_num_filters=32,
        conv2d2_filter_size=(5, 5),
        conv2d2_nonlinearity=lasagne.nonlinearities.rectify,
        # layer maxpool2
        maxpool2_pool_size=(2, 2),
        # dropout1
        dropout1_p=0.5,
        # dense
        dense_num_units=256,
        dense_nonlinearity=lasagne.nonlinearities.rectify,
        # dropout2
        dropout2_p=0.5,
        # output
        output_nonlinearity=lasagne.nonlinearities.softmax,
        output_num_units=10,
        # optimization method params
        update=nesterov_momentum,
        update_learning_rate=0.01,
        update_momentum=0.9,
        max_epochs=10,
        verbose=1,
        )

    # Train the network
    nn = net1.fit(X_train, y_train)

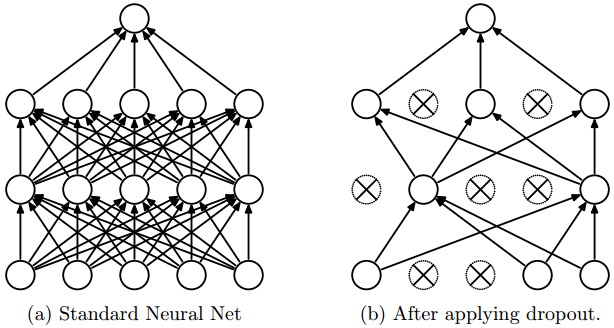

As you can see, in the layers parameter we define a list of tuples with the layer names/types, and then we define the parameters for these layers using the layer name as a prefix. Our architecture here uses two convolutional layers, each followed by pooling, then a fully connected layer (dense layer) and the output layer. There are also dropout layers between some of the layers; dropout is a regularizer that randomly sets input values to zero during training to avoid overfitting (see the dropout image).
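To make the dropout idea concrete, here is a small pure-Python sketch. It is not Lasagne's implementation: the `dropout` function is a hypothetical stand-in, and rescaling the surviving values by 1/(1 - p) is an assumption based on the common "inverted dropout" variant. The `deterministic` flag mirrors the parameter of the same name used later in this post to switch dropout off for the feed-forward pass.

```python
import random


def dropout(values, p=0.5, deterministic=False, rng=random):
    """Inverted dropout on a flat list of activations (illustrative only)."""
    if deterministic or p == 0:
        # Inference mode: pass activations through unchanged.
        return list(values)
    keep = 1.0 - p
    # Zero each value with probability p; scale survivors by 1/keep so the
    # expected activation stays the same as in deterministic mode.
    return [v / keep if rng.random() < keep else 0.0 for v in values]


activations = [0.2, 1.5, 0.7, 0.9]
rng = random.Random(0)  # seeded so the example is repeatable
train_out = dropout(activations, p=0.5, rng=rng)
test_out = dropout(activations, deterministic=True)
```

With `p=0.5`, every entry of `train_out` is either zero or twice the original activation, while `test_out` is identical to the input.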

After calling the fit method, the nolearn package will show the status of the learning process. On my machine, with my humble GPU, I got the results below:

    # Neural Network with 160362 learnable parameters

    ## Layer information

      #  name      size
    ---  --------  --------
      0  input     1x28x28
      1  conv2d1   32x24x24
      2  maxpool1  32x12x12
      3  conv2d2   32x8x8
      4  maxpool2  32x4x4
      5  dropout1  32x4x4
      6  dense     256
      7  dropout2  256
      8  output    10

      epoch    train loss    valid loss    train/val    valid acc  dur
    -------  ------------  ------------  -----------  -----------  ------
          1       0.85204       0.16707      5.09977      0.95174  33.71s
          2       0.27571       0.10732      2.56896      0.96825  33.34s
          3       0.20262       0.08567      2.36524      0.97488  33.51s
          4       0.16551       0.07695      2.15081      0.97705  33.50s
          5       0.14173       0.06803      2.08322      0.98061  34.38s
          6       0.12519       0.06067      2.06352      0.98239  34.02s
          7       0.11077       0.05532      2.00254      0.98427  33.78s
          8       0.10497       0.05771      1.81898      0.98248  34.17s
          9       0.09881       0.05159      1.91509      0.98407  33.80s
         10       0.09264       0.04958      1.86864      0.98526  33.40s

As you can see, the validation accuracy at the end was 0.98526, a pretty good result for only 10 epochs of training.
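The layer sizes and the parameter count in the log can be checked by hand. The sketch below assumes unpadded ("valid") convolutions with stride 1 and non-overlapping 2×2 pooling, which matches the sizes printed above; `conv_out` and `pool_out` are hypothetical helper names, not part of Lasagne or nolearn.

```python
def conv_out(size, filter_size, stride=1, pad=0):
    """Spatial output size of a convolution along one axis."""
    return (size + 2 * pad - filter_size) // stride + 1


def pool_out(size, pool_size):
    """Spatial output size of non-overlapping pooling (border ignored)."""
    return size // pool_size


size, channels, params = 28, 1, 0
shapes = []
for name, filters in [('conv2d1', 32), ('conv2d2', 32)]:
    params += filters * (channels * 5 * 5) + filters  # weights + biases
    size, channels = conv_out(size, 5), filters
    shapes.append((name, channels, size, size))
    size = pool_out(size, 2)
    shapes.append(('maxpool', channels, size, size))

flat = channels * size * size   # 32 * 4 * 4 = 512 inputs to the dense layer
params += flat * 256 + 256      # dense layer
params += 256 * 10 + 10         # output layer

print(shapes)
print(params)  # 160362
```

The dropout layers add no parameters and do not change the shapes, so they are left out of the count.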

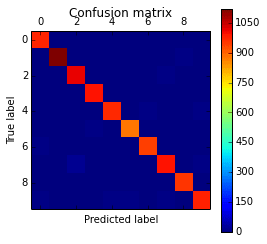

Prediction and Confusion Matrix

Now we can use the model to predict the entire testing dataset:

preds = net1.predict(X_test)

And we can also plot a confusion matrix to check the performance of the neural network classification:

    # Named conf_mat so it does not shadow matplotlib.cm (imported above as cm)
    conf_mat = confusion_matrix(y_test, preds)
    plt.matshow(conf_mat)
    plt.title('Confusion matrix')
    plt.colorbar()
    plt.ylabel('True label')
    plt.xlabel('Predicted label')
    plt.show()

The code above will plot the following confusion matrix:

As you can see, the counts are concentrated on the diagonal (where the predicted label equals the true label), showing the good performance of our classifier.
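For readers who want to see what the matrix actually counts, here is a minimal pure-Python sketch of the same bookkeeping. The helper names are hypothetical and the labels are toy values, not MNIST results; in the post itself scikit-learn's `confusion_matrix` does this work.

```python
def confusion_counts(y_true, y_pred, n_classes):
    """Return an n_classes x n_classes matrix; rows are true labels."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal (correct predictions)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / float(total)


# Toy labels, not MNIST results.
y_true = [0, 1, 2, 2, 1, 0]
y_pred = [0, 1, 2, 1, 1, 0]
m = confusion_counts(y_true, y_pred, 3)
```

Here one sample of class 2 was predicted as class 1, so it lands off the diagonal in row 2, column 1.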

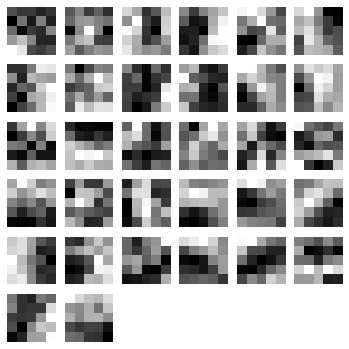

Filters Visualization

We can also visualize the 32 filters from the first convolutional layer:

visualize.plot_conv_weights(net1.layers_['conv2d1'])

The code above will plot the following filters:

As you can see, the nolearn plot_conv_weights plots all the filters present in the layer we specified.

Theano layer functions and Feature Extraction

Now it is time to create Theano-compiled functions that will feed the input data forward through the architecture up to the layer you’re interested in. I’m going to get the functions for the output layer and also for the dense layer before the output layer:

    dense_layer = layers.get_output(net1.layers_['dense'], deterministic=True)
    output_layer = layers.get_output(net1.layers_['output'], deterministic=True)
    input_var = net1.layers_['input'].input_var
    f_output = theano.function([input_var], output_layer)
    f_dense = theano.function([input_var], dense_layer)

As you can see, we now have two Theano functions called f_output and f_dense (for the output and dense layers). Please note that in order to get the layer outputs here we are using an extra parameter called “deterministic”; this is to avoid the dropout layers affecting our feed-forward pass.

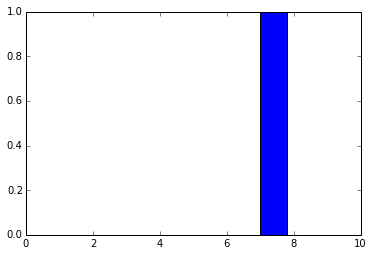

We can now convert an example instance to the input format and then feed it into the theano function for the output layer:

    instance = X_test[0][None, :, :]
    %timeit -n 500 f_output(instance)
    500 loops, best of 3: 858 µs per loop

As you can see, a call to the f_output function takes about 858 µs (the best of 3 runs). We can also plot the output layer activations for the instance:

    pred = f_output(instance)
    N = pred.shape[1]
    plt.bar(range(N), pred.ravel())

The code above will create the following plot:

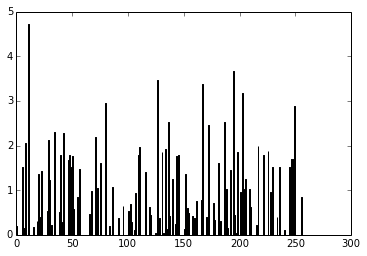

As you can see, the digit was recognized as a 7. Being able to create Theano functions for any layer of the network is very useful: you can create a function (as we did above) that returns the activations of the dense layer (the one before the output layer) and use those activations as features, so the neural network acts as a feature extractor rather than a classifier. Let’s now plot the 256 unit activations of the dense layer:

    pred = f_dense(instance)
    N = pred.shape[1]
    plt.bar(range(N), pred.ravel())

The code above will create the following plot:

You can now use these 256 activations as features for a linear classifier like Logistic Regression or SVM.
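When extracting features for a whole dataset rather than a single instance, it may help to push the data through the function in mini-batches so that large inputs do not have to fit in (GPU) memory at once. The sketch below is only an illustration: `iter_batches` and `extract_in_batches` are hypothetical helpers, and `fake_feature_fn` is a stand-in for a compiled function such as `f_dense`.

```python
def iter_batches(X, batch_size):
    """Yield consecutive slices of X with at most batch_size items each."""
    for start in range(0, len(X), batch_size):
        yield X[start:start + batch_size]


def extract_in_batches(feature_fn, X, batch_size):
    """Apply feature_fn batch by batch and collect the rows in one list."""
    features = []
    for batch in iter_batches(X, batch_size):
        features.extend(feature_fn(batch))
    return features


# Stand-in for f_dense: maps each input to a 2-value "feature vector".
def fake_feature_fn(batch):
    return [[x, x * x] for x in batch]


feats = extract_in_batches(fake_feature_fn, list(range(5)), batch_size=2)
```

With the functions from this post, something like `np.asarray(extract_in_batches(f_dense, X_train, 500))` should give one 256-value row per image (the batch size of 500 is an arbitrary assumption), and that array could then be passed, together with `y_train`, to the `fit` method of a scikit-learn classifier.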

I hope you enjoyed the tutorial !

Thanks for the article! It was very useful — especially the bits about plotting the convolutional layers.

Aside: Pickle can run arbitrary code so it would be good to avoid if possible. I understand it makes the tutorial much easier though :).

Hi,

I am new to Lasagne and starting to implement CNNs. I am facing a problem while storing the trained network parameters. Specifically, I am working on ImageNet.

When I do:

nn = net1.fit(X_train, y_train)

and try to save “nn” by pickle.dump(), I guess I run out of memory.

Can you suggest a way to store such a large Lasagne object? I tried using JSON and HDF5, but they don’t support Lasagne objects.

Thanks,

Avisek

Have you tried using dill: https://pypi.python.org/pypi/dill

ImageNet is sufficiently large to crash Python’s pickle with a maximum recursion error. Therefore you have to increase Python’s recursion limit before you save the network:

    import sys
    sys.setrecursionlimit(10000)

    X, y = load2d()
    net6.fit(X, y)

    import cPickle as pickle
    with open('net6.pickle', 'wb') as f:
        pickle.dump(net6, f, -1)

Thank you for writing this article. I found it very useful for getting up to speed on lasagne and nolearn.

Towards the end you talk about plugging the last layer of the CNN into a SVM. Is that approach similar to what is discussed for Convolutional Kernel Networks: http://yann.lecun.com/exdb/publis/pdf/huang-lecun-06.pdf ? I am very curious to see implementations of this.

I found that removing the first dropout layer improves test accuracy.

heyyy…did you find a method to implement the end part in continuation with this blog?

Thank you for writing this article.

and, I have some errors.

e.g., 1- output_shape = layer.get_output_shape()

AttributeError: ‘DenseLayer’ object has no attribute ‘get_output_shape’

2- from lasagne.objectives import mse

ImportError: cannot import name mse

Hi Kemal, for the second error, you probably have an old version of nolearn, since mse has been renamed to squared_error in Lasagne.

pip install -r https://raw.githubusercontent.com/dnouri/nolearn/master/requirements.txt

pip install git+https://github.com/dnouri/nolearn.git@master#egg=nolearn==0.7.git

Hey,

The article was really good for beginners. The predictions on the dataset itself were accurate. But when I tried to give it an image that doesn’t belong to the dataset, it gave a dimension error. I realized the size and channels did not match the input layer, so I resized the image to 28×28, but it still generates the same error.

this is the error .

TypeError: (‘Bad input argument to theano function with name “/home/raza/workspace/Test/Convnet/demoTest.py:166” at index 0(0-based)’, ‘Wrong number of dimensions: expected 4, got 3 with shape (28, 28, 4).’)

Does anyone receive an error regarding not finding global variables ignore_bmax_pool_2d() and order?

def __init__(self, incoming, pool_size, stride=None, pad=(0, 0),

ignore_border=True, **kwargs):

super(MaxPool2DLayer, self).__init__(incoming,

pool_size,

stride,

pad,

ignore_bmax_pool_2d() order,

mode=’max’,

**kwargs)

secondly, TypeError: max_pool_2d() got an unexpected keyword argument ‘mode’ within the same pool.py file

Let me know if a solution is available.

Hello,

First of all, thank you for the article, it was very helpful.

However, I was wondering if it was normal that after running the code “MNIST Dataset Loading” I obtained a GZ file. Therefore I unziped it and then I obtained a PKL file and I don’t know how to open this file.

Thank you for your help

There is no need to extract that file. As you can see in the load_dataset function, the gzip module lets you access the content of a gz file directly.

A pkl-file contains serialized python objects. You can load those objects by means of the pickle-module:

https://docs.python.org/2/library/pickle.html

Thank you for this great introduction to convnets. It helped me a lot.

I’ve got one question regarding your y_-variables. I expected them to be represented as oneHot-variables (as you have 10 output nodes each representing one digit). Where, for instance, a 2 translates into [0, 0, 1.0, 0, 0, 0, 0, 0, 0, 0].

But you skip that step and the network still works appropriately. How is that possible? Is there some sort of implicit conversion going on the background?

Found the solution myself. My sample code, which is a mix of code from this tutorial and own code, complained about the dimension of the y_train variable. After turning the regression flag off, the network performed as expected.
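As a side note on why integer labels can work without an explicit one-hot step: for a classification loss such as categorical cross-entropy, indexing the predicted probabilities with the integer label gives the same value as summing against the one-hot vector. The sketch below only illustrates that arithmetic; the function names and the probability values are made up, and it is not a description of nolearn's internals.

```python
import math


def cross_entropy_int(probs, label):
    """Loss when the target is an integer class index."""
    return -math.log(probs[label])


def cross_entropy_one_hot(probs, one_hot):
    """Loss when the target is a one-hot vector."""
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs))


# Made-up softmax output for one image, and the two encodings of the label 2.
probs = [0.05, 0.05, 0.6, 0.1, 0.05, 0.05, 0.025, 0.025, 0.025, 0.025]
one_hot_2 = [0, 0, 1.0, 0, 0, 0, 0, 0, 0, 0]

loss_int = cross_entropy_int(probs, 2)
loss_one_hot = cross_entropy_one_hot(probs, one_hot_2)
```

Both encodings yield the same loss, because every term of the one-hot sum except the one at index 2 is multiplied by zero.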

Hi, I have tweeted to you yesterday and you told me to explain the problem in here.

I use Ubuntu 14.04 LTS, Python 2.7.11 |Anaconda 2.4.1 (64-bit) and I use Spyder. All of the libraries, which I use, have been updated and upgraded to the newest versions. For example I have changed the line from lasagne.objectives import mse to from lasagne.objectives import squared_error

The errors I have confronted are:

1) from nolearn.lasagne import visualize

line gives the error:

Traceback (most recent call last):

File “”, line 1, in

ImportError: cannot import name visualize

2) nn = net1.fit(X_train, y_train)

line gives the error:

Traceback (most recent call last):

File “”, line 1, in

File “/usr/local/lib/python2.7/dist-packages/nolearn/lasagne.py”, line 140, in fit

self._print_layer_info(self.get_all_layers())

File “/usr/local/lib/python2.7/dist-packages/nolearn/lasagne.py”, line 376, in _print_layer_info

output_shape = layer.get_output_shape()

AttributeError: ‘DenseLayer’ object has no attribute ‘get_output_shape’

3) preds = net1.predict(X_test)

line gives the error:

Traceback (most recent call last):

File “”, line 1, in

File “/usr/local/lib/python2.7/dist-packages/nolearn/lasagne.py”, line 249, in predict

y_pred = np.argmax(self.predict_proba(X), axis=1)

File “/usr/local/lib/python2.7/dist-packages/nolearn/lasagne.py”, line 242, in predict_proba

probas.append(self.predict_iter_(Xb))

AttributeError: ‘NeuralNet’ object has no attribute ‘predict_iter_’

4) dense_layer = layers.get_output(net1.layers_[‘dense’], deterministic=True)

output_layer = layers.get_output(net1.layers_[‘output’], deterministic=True)

input_var = net1.layers_[‘input’].input_var

dense_layer = layers.get_output(net1.layers_[‘dense’], deterministic=True)

output_layer = layers.get_output(net1.layers_[‘output’], deterministic=True)

input_var = net1.layers_[‘input’].input_var

these 6 lines give the same errors:

Traceback (most recent call last):

File “”, line 1, in

AttributeError: ‘NeuralNet’ object has no attribute ‘layers_’

I am waiting for your help impatiently. Thanks for your time.

Sincerely Yours

Hey i want to visualize the output of convolutional layer. I have used the command visualize.plot_conv_weights(), But its not showing anything. Can somebody help me for that?

and i also want to know :

biases on every layers

the datetype of input file

the best weight of datalose(could be used to use)

Hi All

I am trying convolution neural networks for a predictive model on a time series data. So I have been looking at your code in http://blog.christianperone.com/2015/08/convolutional-neural-networks-and-feature-extraction-with-python/ . I wanted to know how would I configure the convolutional neural net for an input size of say 7 and output size of 1. I would appreciate your help.

Regards,

Joshua

because some data was used to train the model(find best weights,biases),the output is a action or flag or many….

Nice man. Thank you for the great tutorial. I’m new to CNNs and trying to implement one in Python. I’m having a hard time configuring my GPU with OpenCL, but that is another story. Do you know of any references for creating a dataset like MNIST? I have some images to build a dataset from, but I’m lost in this task. Thanks in advance.

Use an lmdb database. It will be easier to use if you know how to work with the Caffe deep learning library. You will also have to hack your way around to make the lmdb database work with Lasagne. A simple Google search will get you started.

I want to do the handwriting recognition of digits trained using MNIST digits.

Normally, people extract the HOG features from the image and then train it using SVM. And during prediction time, HOG feature is extracted from the real image and then the prediction is made.

But, I want to do the same thing using convolutional network you mentioned in your blog. The accuracy of 98.5 % is amazing, given that computation is less intense compared to other complicated and computationally intense network.I am currently serializing the ‘net1’ object by pickle. What I don’t know is what kind of feature to extract from the real image and feed it to classifier to make the prediction.

Partho, I’m trying to send you an email but it always return an error saying that your mail is blocked. Do you have any other mail besides the one ending in @asu.edu ? You can answer here, I’ll remove the comment after seeing it to avoid spam to your mailbox.

Sir,I want to make real time object tracking and machine learning in object recognition over drone,how can i implement it.

I want to use the features extracted from the CNN code and train it using the SVM classifier as you have mentioned at the end. What i am not understanding is what should be the fed to the classifier. Can you please help me?

Is the choice of 32 filters arbitrary or is it based on a formula or heuristic of some sort?

Hi,

Thank you very much for the article.

Ive got a simple question to ask. I want to perturb the weights with some percent error and then I want to use the network with the perturbed weight on the same data to compare the results.

Any one willing to help?

BTW I’m pretty new to python and all these libraries.

THanks for writing this article. I really liked where you hinted how to put an SVM on top of a deep network (VGG16) by using Theano. Medical images are what I am going to try this technique on. There are very few examples (about 300) in my medical image dataset. I think your suggestions will help a lot with it.

Thank you so much for your tutorial. I’ve tried your tutorial with my own dataset (digits and letters) of 82800 instances, but when I try to use f_dense for feature extraction, it gives me the following error:

if i run

pred = f_dense(input)

MemoryError Traceback (most recent call last)

in ()

—-> 1 pred = f_dense(X_test)

/srv/Python-DL/py-env/local/lib/python2.7/site-packages/theano/compile/function_module.pyc in __call__(self, *args, **kwargs)

869 node=self.fn.nodes[self.fn.position_of_error],

870 thunk=thunk,

–> 871 storage_map=getattr(self.fn, ‘storage_map’, None))

872 else:

873 # old-style linkers raise their own exceptions

/srv/Python-DL/py-env/local/lib/python2.7/site-packages/theano/gof/link.pyc in raise_with_op(node, thunk, exc_info, storage_map)

312 # extra long error message in that case.

313 pass

–> 314 reraise(exc_type, exc_value, exc_trace)

315

316

/srv/Python-DL/py-env/local/lib/python2.7/site-packages/theano/compile/function_module.pyc in __call__(self, *args, **kwargs)

857 t0_fn = time.time()

858 try:

–> 859 outputs = self.fn()

860 except Exception:

861 if hasattr(self.fn, ‘position_of_error’):

MemoryError: Error allocating 368640000 bytes of device memory (CNMEM_STATUS_OUT_OF_MEMORY).

Apply node that caused the error: GpuAllocEmpty(Shape_i{0}.0, Shape_i{0}.0, Elemwise{Composite{((((i0 + i1) – i2) // i3) + i3)}}[(0, 0)].0, Elemwise{Composite{((((i0 + i1) – i2) // i3) + i3)}}[(0, 0)].0)

Toposort index: 30

Inputs types: [TensorType(int64, scalar), TensorType(int64, scalar), TensorType(int64, scalar), TensorType(int64, scalar)]

Inputs shapes: [(), (), (), ()]

Inputs strides: [(), (), (), ()]

Inputs values: [array(5000), array(32), array(24), array(24)]

Outputs clients: [[GpuDnnConv{algo=’small’, inplace=True}(GpuContiguous.0, GpuContiguous.0, GpuAllocEmpty.0, GpuDnnConvDesc{border_mode=’valid’, subsample=(1, 1), conv_mode=’conv’, precision=’float32′}.0, Constant{1.0}, Constant{0.0})]]

HINT: Re-running with most Theano optimization disabled could give you a back-trace of when this node was created. This can be done with by setting the Theano flag ‘optimizer=fast_compile’. If that does not work, Theano optimizations can be disabled with ‘optimizer=None’.

HINT: Use the Theano flag ‘exception_verbosity=high’ for a debugprint and storage map footprint of this apply node.

help me please.

when i am running this program i am gettin an error

Traceback (most recent call last):

File “D:\Anaconda\Lib\site-packages\pythonwin\pywin\framework\scriptutils.py”, line 326, in RunScript

exec(codeObject, __main__.__dict__)

File “D:\Anaconda\Lib\idlelib\programs\p1.py”, line 79, in

nn=net1.fit(X_train,y_train)

NameError: name ‘X_train’ is not defined

plz give me ur suggestion on it

Hello,

Thanks for the article. I would like to train on a dataset of images that I create. I have converted the images to np array and also changed the paramaters of the net to reflect the size of the input layer accepted (in my case 100×100 images).

Yet I get errors such as IndexError: index 10 is out of bounds for axis 0 with size 10

Apply node that caused the error: CrossentropySoftmaxArgmax1HotWithBias(Dot22.0, output.b, y_batch)

Is there any tutorial maybe I can follow on how to build this dataset?

Thanks

Hi Christian,

Thanks for your wonderful article. I was trying your code. But while “training the model”, it’s running for eternity. I’m not getting any error which I can debug. Can you please suggest what can be the issue? I’m using Windows 10 with 4 GB Ram.

Regards,

Arijit

Hi Arijit,

Can you please tell me which versions of nolearn, lasagne and theano you have installed?

Hey Vibhor,

I am getting the same problems as Arijit

It seems the RAM gets maxed out no matter how much is available (I have 16GB) which is good for the first output but then the program struggles to find the RAM needed (because it’s already used most of it?)

Versions of packages:

nolearn = 0.6 py27_0

lasagne = 0.1 py27_0

theano = 0.9 py27_0

good evening,

I tried to run and it results in error:

ImportError: cannot import name ‘urlretrieve’

Can someone help me?

Hi, you’re probably using Python 3 instead of Python 2.

Yes. I used it in Python 3 and removed urlretrieve.

Hi Christian,

How do I specify the activation function ‘RELU’ in the NeuralNet function?

Thank you,

Siva

How can I save the trained model and convoNet for further training and testing purpose

abhie: How can I save the trained model —> pickle
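A minimal sketch of what saving and reloading with pickle could look like, combining it with the recursion-limit tip from an earlier comment. The helper names `save_model` and `load_model` are hypothetical, and the limit of 10000 is simply the value suggested above. As another commenter noted, pickle can run arbitrary code, so only load files you trust.

```python
import pickle
import sys


def save_model(model, path, recursion_limit=10000):
    """Pickle a model object, temporarily raising the recursion limit."""
    old_limit = sys.getrecursionlimit()
    sys.setrecursionlimit(max(old_limit, recursion_limit))
    try:
        with open(path, 'wb') as f:
            pickle.dump(model, f, pickle.HIGHEST_PROTOCOL)
    finally:
        # Restore the previous limit whether or not the dump succeeded.
        sys.setrecursionlimit(old_limit)


def load_model(path):
    """Load a model previously written by save_model."""
    with open(path, 'rb') as f:
        return pickle.load(f)
```

For the network in this post this would be used as `save_model(net1, 'net1.pickle')` and later `net1 = load_model('net1.pickle')`, where the file name is just an example.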

Hello.

I don’t understand why

— ——– ——–

0 input 1x28x28

1 conv2d1 32x24x24 —> why 24×24?

Regards

The 24×24 is the size of the output feature map that results from applying a 5×5 filter with stride 1 (and no padding) over a 28×28 input image: 28 − 5 + 1 = 24. Take a look at http://cs231n.github.io/convolutional-networks/ for more information if you are still in doubt. Thanks!

That is because a 5×5 filter is applied. Applying the filter loses 2 pixels along each border, so 4 pixels are lost in total in each dimension: 28 → 24, and the images end up 24×24. The 32 is the depth (the number of filters you have).

Hello! Thank you for this tutorial! As I attempted to follow it, I got into some issues I could not resolve. Specifically, when I tried to import NeuralNet from nolearn.lasagne I got a cascade of errors:

File “”, line 1, in

from nolearn.lasagne import NeuralNet

File “/Applications/PyCharm CE.app/Contents/helpers/pydev/_pydev_bundle/pydev_import_hook.py”, line 21, in do_import

module = self._system_import(name, *args, **kwargs)

File “/Users/2128506mj/anaconda/envs/py27/lib/python2.7/site-packages/nolearn/lasagne/__init__.py”, line 1, in

from .handlers import (

File “/Applications/PyCharm CE.app/Contents/helpers/pydev/_pydev_bundle/pydev_import_hook.py”, line 21, in do_import

module = self._system_import(name, *args, **kwargs)

File “/Users/2128506mj/anaconda/envs/py27/lib/python2.7/site-packages/nolearn/lasagne/handlers.py”, line 12, in

from .util import ansi

File “/Applications/PyCharm CE.app/Contents/helpers/pydev/_pydev_bundle/pydev_import_hook.py”, line 21, in do_import

module = self._system_import(name, *args, **kwargs)

File “/Users/2128506mj/anaconda/envs/py27/lib/python2.7/site-packages/nolearn/lasagne/util.py”, line 13, in

from lasagne.layers.cuda_convnet import Conv2DCCLayer

File “/Applications/PyCharm CE.app/Contents/helpers/pydev/_pydev_bundle/pydev_import_hook.py”, line 21, in do_import

module = self._system_import(name, *args, **kwargs)

File “/Users/2128506mj/anaconda/envs/py27/lib/python2.7/site-packages/lasagne/layers/cuda_convnet.py”, line 16, in

from theano.sandbox.cuda.basic_ops import gpu_contiguous

File “/Applications/PyCharm CE.app/Contents/helpers/pydev/_pydev_bundle/pydev_import_hook.py”, line 21, in do_import

module = self._system_import(name, *args, **kwargs)

File “/Users/2128506mj/anaconda/envs/py27/lib/python2.7/site-packages/theano/sandbox/cuda/__init__.py”, line 6, in

“You are importing theano.sandbox.cuda. This is the old GPU back-end and ”

SkipTest: You are importing theano.sandbox.cuda. This is the old GPU back-end and is removed from Theano. Use Theano 0.9 to use it. Even better, transition to the new GPU back-end! See https://github.com/Theano/Theano/wiki/Converting-to-the-new-gpu-back-end%28gpuarray%29

I have a Macbook Pro with OS X 10.10. It has Intel graphics chip, so I haven’t installed CUDA. Other features: virtual environment with Python 2.7.13 |Anaconda 4.4.0 (x86_64), PyCharm Community version 2017.2

I tried reinstalling and updating Theano and Lasagne, as well as pygpu, of which all I now have the latest versions (Lasagne (0.2.dev1), Theano (0.10.0.dev1),pygpu (0.6.8))

I’ve read this could be a Theano issue, so I configured Theano to use CPU with force_device = True, but it did not help. This is probably a lasagne issue, as it makes calls for theano.sandbox.cuda.

Is this possible to configure the setup to run without CUDA. How exactly can I do this? Any advice is much appreciated!

Cheers,

Ivan

Hai christian,

Thank you for your article it’s help me a lot. I have question, it’s work for video feature extraction? If not which part should i change? Thank you.

Best regards

Manggala

I ask how to adapt a code to work with other dataset?

sir,

what should i do if i want to extract the features from a newdataset?

Could you please list the versions of the packages with which the code run with no errors.

I’m getting many errors with:

Lasagne: 0.1

Theano: 1.0.1

due to the change of pooling

Thanks

I have the same request:)

Your tutorial is the best. All of the other tutorials are complete crap. I CAN ACTUALLY READ YOUR TUTORIAL WITHOUT GETTING STUCK ON STUPID QUESTIONS!!! THANK YOU!!!

Is there a way to determine the memory required to train or test a deep learning model written using theano and lasagne

Hi Christian,

You’ve done a great job. But Theano has since been deprecated, which causes a lot of compatibility problems with nolearn and Lasagne.

Is it possible that you can come up with a version of code using Tensorflow instead?

Cheers,

Bridget

Thanks for writing this, it’s useful for me! I got some errors, though. Can you help me?

from nolearn.lasagne import NeuralNet

NameError: name ‘NeuralNet’ is not defined